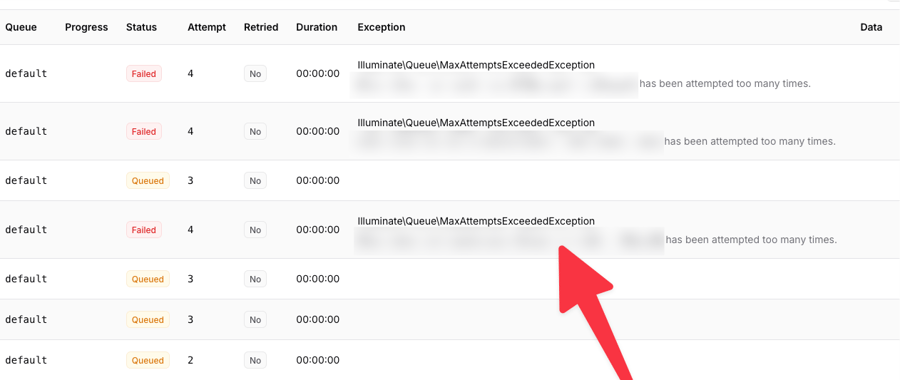

At Streamfinity, we recently ran into an issue where the Job Queue would stop processing dispatched jobs and just fail them with a MaxAttemptsExceededException.

The Situation

With our analytics and stream tracking at Streamfinity, we process hundreds of thousands of data points each day. In each analytics assembly job, we aggregate an estimated 50,000 data points.

So, as you can imagine, those are long-running jobs with non-deterministic execution times.

Thus, we need to make sure that only a single job is processing the data at a time. This can be achieved with the WithoutOverlapping middleware. It acquires a lock right before the job starts processing, using Laravel's atomic locks. As long as that lock is held, every further attempt to process a job of the same class will eventually result in a MaxAttemptsExceededException.

    use Illuminate\Queue\Middleware\WithoutOverlapping;

    final class SampleJob
    {
        // Only one SampleJob may be processed at a time.
        public function middleware(): array
        {
            return [
                new WithoutOverlapping(),
            ];
        }
    }

Because our jobs are long-running, errors can occur and jobs will fail. If a job fails or times out in a way that prevents the lock from being released, the lock stays in place and every subsequent attempt to dispatch the job fails as well.
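The effect can be reproduced without Laravel. The following is a minimal, framework-free sketch: the `InMemoryLock` class is hypothetical and is not how Laravel implements its locks, and timestamps are passed in by hand to keep the example deterministic. It shows a lock that is never released blocking every later attempt, and how a time-to-live lets the lock recover on its own.

```php
<?php

declare(strict_types=1);

// Hypothetical in-memory lock, for illustration only (not Laravel's implementation).
final class InMemoryLock
{
    /** @var array<string, int|null> lock key => expiry timestamp (null = never expires) */
    private array $locks = [];

    /** Try to take the lock at time $now; a $ttl of 0 means "never expire". */
    public function acquire(string $key, int $now, int $ttl = 0): bool
    {
        // array_key_exists() is used because isset() is false for null values.
        if (array_key_exists($key, $this->locks)) {
            $expiresAt = $this->locks[$key];

            if ($expiresAt === null || $expiresAt > $now) {
                return false; // still locked by an earlier attempt
            }
        }

        $this->locks[$key] = $ttl > 0 ? $now + $ttl : null;

        return true;
    }
}

$lock = new InMemoryLock();

// No TTL: the first job takes the lock, crashes, and never releases it.
$withoutTtl = [
    $lock->acquire('SampleJob', 0),    // true
    $lock->acquire('SampleJob', 3600), // false, even an hour later
];

// TTL of 300 seconds: the stale lock disappears once it has expired.
$withTtl = [
    $lock->acquire('OtherJob', 0, 300),   // true
    $lock->acquire('OtherJob', 100, 300), // false, lock still valid
    $lock->acquire('OtherJob', 301, 300), // true, lock has expired
];

var_dump($withoutTtl, $withTtl);
```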

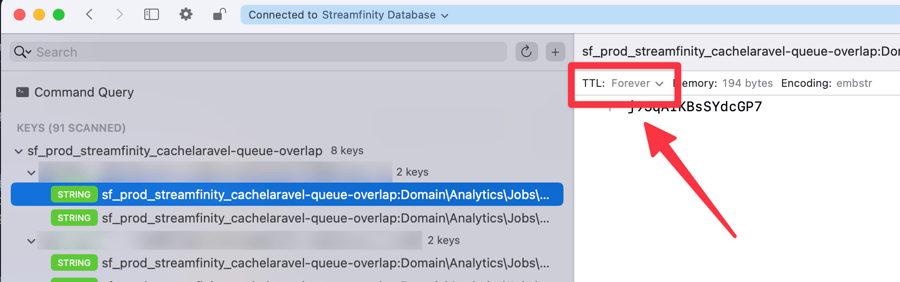

Solution

This is why you should always define an expiration time with the expireAfter() method: a duration after which you can safely assume that the job has silently failed.

    use Illuminate\Queue\Middleware\WithoutOverlapping;

    final class SampleJob
    {
        public function middleware(): array
        {
            return [
                (new WithoutOverlapping())
                    ->dontRelease()
                    ->expireAfter(60 * 5), // lock expires after 5 minutes (value in seconds)
            ];
        }
    }
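When picking the expiration, it probably makes sense to keep it longer than the job's own timeout, so the lock cannot expire while a job is still legitimately running. Below is a sketch of that idea inside a Laravel application. The class name `AssembleAnalyticsJob`, the `$channelId` property, and the concrete numbers are assumptions for illustration and not taken from our code base. Passing a key to `WithoutOverlapping` scopes the lock to that key instead of the whole job class.

```php
<?php

use Illuminate\Bus\Queueable;
use Illuminate\Contracts\Queue\ShouldQueue;
use Illuminate\Foundation\Bus\Dispatchable;
use Illuminate\Queue\InteractsWithQueue;
use Illuminate\Queue\Middleware\WithoutOverlapping;

// Hypothetical job, used only to illustrate the configuration.
final class AssembleAnalyticsJob implements ShouldQueue
{
    use Dispatchable, InteractsWithQueue, Queueable;

    // The worker stops the job after 4 minutes (value in seconds).
    public $timeout = 240;

    public function __construct(private int $channelId)
    {
    }

    public function middleware(): array
    {
        return [
            // One lock per channel; overlapping jobs are dropped instead of retried.
            (new WithoutOverlapping((string) $this->channelId))
                ->dontRelease()
                // Assumed safety margin: timeout plus one minute.
                ->expireAfter($this->timeout + 60),
        ];
    }

    public function handle(): void
    {
        // Aggregate the data points for $this->channelId here.
    }
}
```

With this setup, a job that is killed at its timeout leaves behind a lock that clears itself about a minute later, while jobs for other channels are never blocked by it.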
